Data Science Setup

GPU Setup for Deep Learning

The following are some highly rated suggestions. A CPU should be sufficient for some of the exercises, but it is good practice to set up an NVIDIA GPU/CUDA/cuDNN environment at least once so that you know how to do it when the datasets get bigger.

Getting your Environment Set Up

Hardware

A decade ago, a molecular dynamics study already reported significant speed-ups, of up to 25x, from running simulations on an NVIDIA GPU (Graphics Processing Unit) programmed with CUDA (Compute Unified Device Architecture):

Their computational power exceeds that of the CPU by orders of magnitude: while a conventional CPU has a peak performance of around 20 Gigaflops, a NVIDIA GeForce 8800 Ultra reaches theoretically 500 Gigaflops.

J.A. van Meel et al. arXiv. 20 Sept. 2007

https://arxiv.org/PS_cache/arxiv/pdf/0709/0709.3225v1.pdf

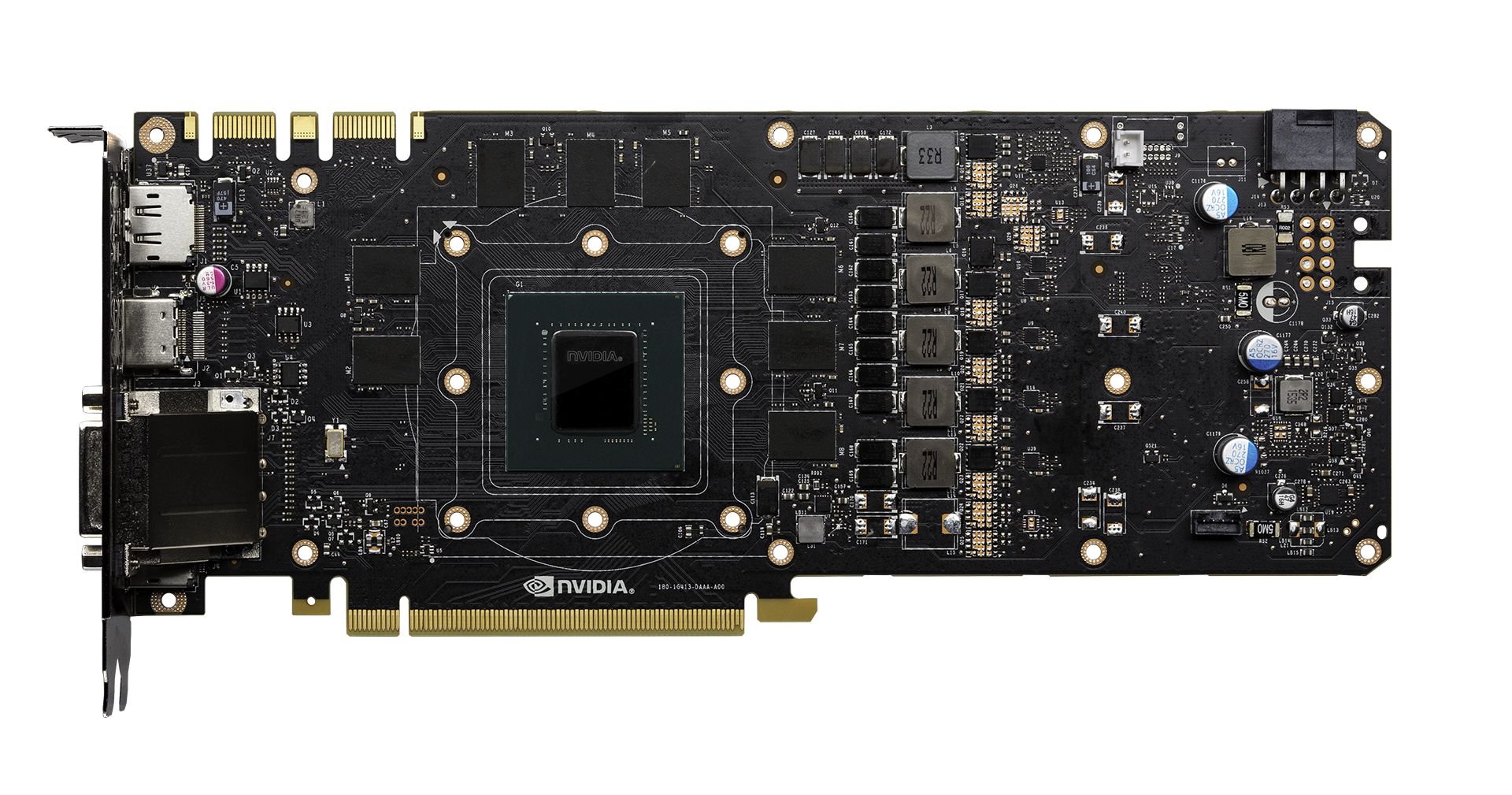

Nowadays, a standard choice is the NVIDIA GTX 1080 for deep learning as well as bitcoin mining, processing at speeds as high as 8,873 GFLOPS.
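To put the quoted numbers side by side, here is a small sketch that computes the ratios between the peak figures mentioned above. The dictionary keys and the function name are made up for illustration; the values are simply the GFLOPS numbers quoted in this section. These are theoretical peaks, not measured application speed-ups.

```python
# Peak theoretical throughput figures quoted in this section, in GFLOPS.
# The labels are illustrative names, not official product strings.
PEAK_GFLOPS = {
    "conventional CPU (2007)": 20,
    "GeForce 8800 Ultra (2007)": 500,
    "GTX 1080": 8873,
}


def peak_ratio(faster, slower):
    """Return how many times higher the peak of `faster` is than `slower`."""
    return PEAK_GFLOPS[faster] / PEAK_GFLOPS[slower]


if __name__ == "__main__":
    # 500 / 20
    print(peak_ratio("GeForce 8800 Ultra (2007)", "conventional CPU (2007)"))  # 25.0
    # 8873 / 500, rounded to one decimal place
    print(round(peak_ratio("GTX 1080", "GeForce 8800 Ultra (2007)"), 1))  # 17.7
```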

Another advantage of GPUs is that, on highly parallel workloads, they typically do more work per watt than their CPU counterparts.

Thus the accepted options for training deep learning models (in Python, even!) are GPUs or TPUs. Other hardware options include ASICs.

Computer-Laptop

Anything with an NVIDIA GTX 1060 (GPU) is good if you travel a lot or cannot necessarily depend on wifi.

- E.g.: https://www.razerzone.com/gaming-systems/razer-blade-pro - plan to get a 1060 with a 256 GB SSD plus a 2 TB hard drive

- GTX 1080 (extra $2k; only get if this is your primary machine and you travel a lot/have spotty wifi).

Computer-Desktop

Any gaming desktop with at least a GTX 1080.

- Option 1: Custom built. If you go this route, add up your GPU RAM and multiply by 2 to get your minimum system RAM. Get a CPU with 48 PCIe lanes (so you can move to 2 GPUs later).

- Option 2: https://lambdal.com/products/quad (this is probably overkill, honestly, and your electricity bill might double. Will make a great space heater)
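The RAM rule of thumb for a custom build can be written out as a one-line calculation. This is only a sketch of the rule as stated here; the function name is hypothetical, and the 8 GB per card used in the example is an assumption about the cards you buy.

```python
def min_system_ram_gb(gpu_memory_gb):
    """Rule of thumb from this section: system RAM >= 2 x total GPU memory.

    `gpu_memory_gb` is a list with the memory of each installed GPU, in GB.
    """
    return 2 * sum(gpu_memory_gb)


if __name__ == "__main__":
    # Assumed example: cards with 8 GB of memory each.
    print(min_system_ram_gb([8]))     # one card  -> 16
    print(min_system_ram_gb([8, 8]))  # two cards -> 32
```

Sizing for the second GPU up front avoids having to replace memory when you add the card later.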

Computer-Remote

- Option 1. Paperspace. This is the easiest option. Go to https://www.paperspace.com/console and sign up for an account.

- There is a monthly fee plus small GPU Jupyter notebook usage fees for the Gradient 1 option.

- Instructions: Choose Gradient option -> Notebooks -> Paperspace + Fast.AI 0.7.x -> P4000 -> Create Notebook (this will spin up a Jupyter Notebook system; yes, it’s for fast.ai, but it’s a well-set-up option, backed by an NVIDIA GPU and CUDA/cuDNN).

- Once you are in the notebook environment, either start a terminal or type in a notebook cell:

! pip install torch_nightly -f https://download.pytorch.org/whl/nightly/cu92/torch_nightly.html

(the ! is just a way to escape into the OS from a Jupyter notebook)

- Make sure to stop the notebook server when not using it to avoid incurring unnecessary fees.

- Option 2. Use Azure (if you have or want an Azure subscription) and set up a Jupyter notebook system. This is the more flexible option, as you can scale the VM to your needs, log in to the desktop, or interface through SSH. You may also add as much extra storage as you need for datasets and whatnot.

- It’s suggested to provision a new NC-6 (or NC12) (v3) Linux (Ubuntu) Deep Learning Virtual Machine, update everything, install the packages, add a 1TB HDD data disk (add disk to the DSVM) and start a password-protected Jupyter Notebook in a tmux session. DO NOT put sensitive data on this. It has open ports and admin rights to the system, is protected only by a password, and is not believed to use SSL unless you set up a certificate. There are more secure options, but they are more advanced and not covered in this getting-started guide.

- A nice getting started video for provisioning a DSVM for Jupyter can be found here: Video.

- Stop the VM in the Azure Portal when not using it to avoid incurring unnecessary fees.

IoT-Test_Device (For Inference)

Raspberry Pi v3, NVIDIA TX-1, NVIDIA TX-2, or Xavier if you have the funds. If you are feeling adventurous, get a few Arduinos as well.

Software

- Docker for Mac or Docker for Windows (avoid Toolbox)

- Anaconda

- If you have a GPU

- Go to CUDA Downloads

- Join Nvidia Developer program

- Update your GPU drivers

- Install CUDA & cuDNN

- Install your packages
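Once the steps above are done, a quick sanity check can confirm that the driver and PyTorch both see the GPU. The sketch below is one possible way to do that, not an official procedure: the two function names are hypothetical, it assumes the `nvidia-smi` tool that ships with the NVIDIA driver is on your PATH, and it reports `None` rather than failing when PyTorch is not installed.

```python
import shutil
import subprocess


def driver_visible():
    """Return True if `nvidia-smi` is on PATH and exits without error."""
    exe = shutil.which("nvidia-smi")
    if exe is None:
        return False
    try:
        result = subprocess.run([exe], capture_output=True, timeout=30)
    except (OSError, subprocess.TimeoutExpired):
        return False
    return result.returncode == 0


def torch_sees_cuda():
    """Return True/False from PyTorch, or None if PyTorch is not installed."""
    try:
        import torch
    except ImportError:
        return None
    return bool(torch.cuda.is_available())


if __name__ == "__main__":
    print("NVIDIA driver visible:", driver_visible())
    print("PyTorch sees CUDA:", torch_sees_cuda())
```

If the driver is visible but PyTorch reports `False`, a likely cause is a PyTorch build that does not match the installed CUDA version.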

Additional Resources

- StackOverflow account

- The Stats/ML StackExchange is a useful place to pose questions about ML and stats Link

- How to calculate peak theoretical performance of a CPU-based HPC system

Important additional libraries:

- Jupyter - Ref

- PyTorch - Ref

- Packages (place in a requirements.txt file in your working conda environment and install all at once with: pip install -r requirements.txt):

jupyter

scikit-learn

scikit-image

seaborn

torch==0.4.1

matplotlib

numpy

Pillow

opencv-python
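After running the install, it can help to confirm what actually ended up in the environment. The following is a minimal sketch, assuming Python 3.8 or newer (for `importlib.metadata`); the helper names are hypothetical, and it only understands plain names and `==` pins like the ones listed above, not the full requirements syntax.

```python
from importlib import metadata


def parse_requirement(line):
    """Split a line such as 'torch==0.4.1' into (name, pinned_version_or_None).

    Returns None for blank lines and comment-only lines.
    """
    line = line.split("#", 1)[0].strip()
    if not line:
        return None
    name, sep, version = line.partition("==")
    return name.strip(), (version.strip() if sep else None)


def installed_version(name):
    """Return the installed version of a distribution, or None if absent."""
    try:
        return metadata.version(name)
    except metadata.PackageNotFoundError:
        return None


def check(lines):
    """Return (name, pinned, found, ok) for each requirement line."""
    report = []
    for line in lines:
        parsed = parse_requirement(line)
        if parsed is None:
            continue
        name, pinned = parsed
        found = installed_version(name)
        ok = found is not None and (pinned is None or found == pinned)
        report.append((name, pinned, found, ok))
    return report


if __name__ == "__main__":
    with open("requirements.txt") as handle:
        for name, pinned, found, ok in check(handle):
            status = "ok" if ok else "MISSING OR MISMATCHED"
            print(f"{name}: wanted {pinned or 'any'}, found {found} -> {status}")
```

Run it from the directory that holds requirements.txt, inside the same conda environment you installed into.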